To Mock or not to Mock, that is the question...

Hi there, after an overview of the new performance game in one of my previous posts, I got caught in my thoughts and one thing did not leave my mind: mocks.

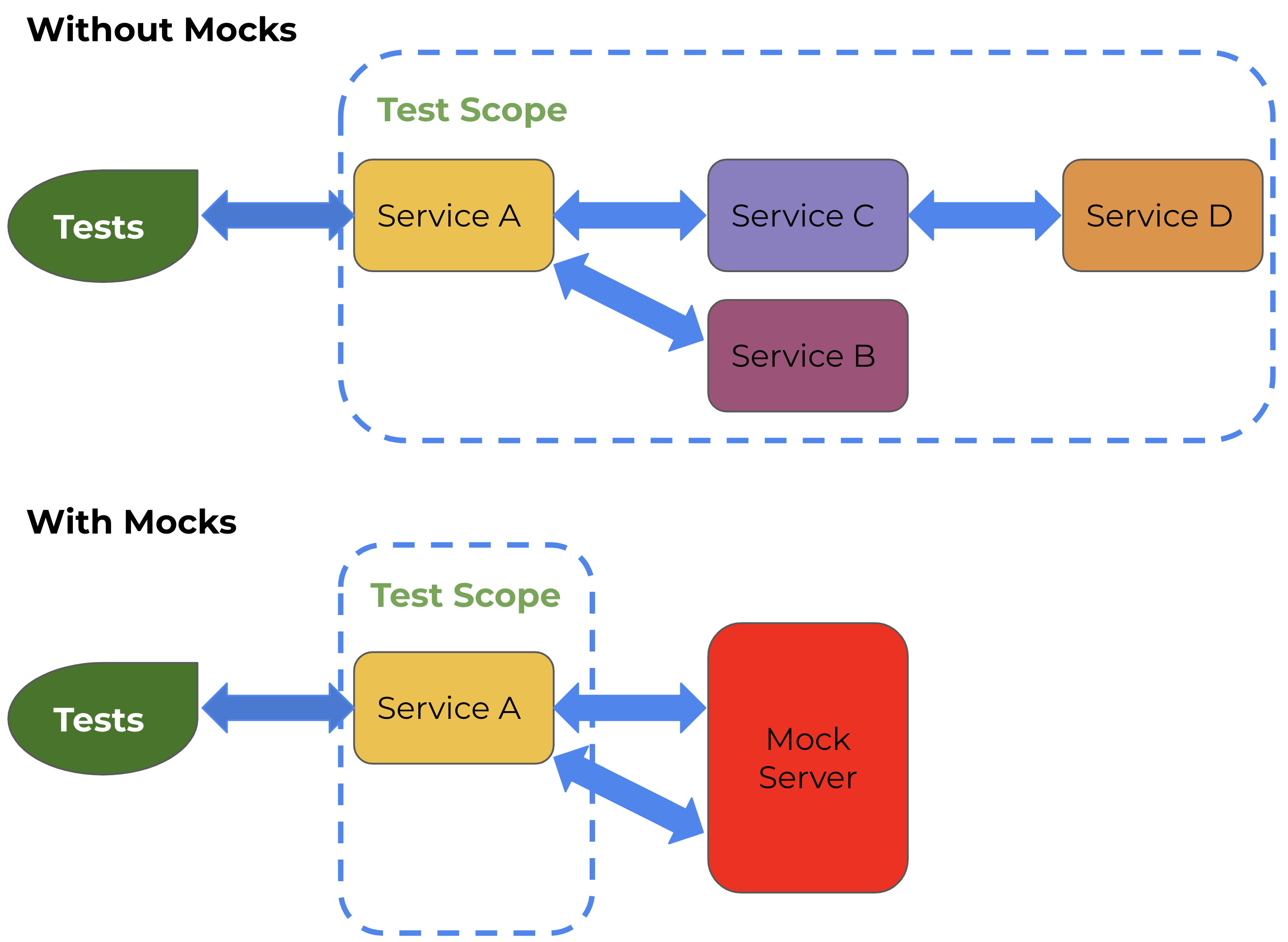

For those who have never heard of it, this concept is most often associated with unit testing (there is even a discussion about the differences between stubs and mocks that I will not go into here: https://martinfowler.com/articles/mocksArentStubs.html), and consists of mimicking a service/API so that it behaves in a controlled way. The key here is control: if you can control the services surrounding your application, you can generate the exact scenarios you want to validate, independently of one another.

You would not burn down the office to validate that the fire extinguishers are working correctly; instead you push a test button.

It is the same here: why would you want enormous environments with complicated data just to test your application? Worse, instead of focusing on testing your service/application, you end up testing its dependencies.

Why mocking?

Before getting into the whys, let me mention that some Test Data Management solutions are appearing that let you inject data into different environments, either fetching it from production (with all the necessary precautions, such as masking) or generating synthetic data. But if you can control the services in front of the databases, why complicate things?

Using mocks allows you to focus on your application/service when testing and removes the instability generated by other services or environments. In my experience it is very hard to get a working environment with proper data to validate something: services are not working properly, data is not clean because everyone accesses and changes it, incorrect versions are installed, or the services our application depends on are overloaded and we notice slowness in our own application. None of these difficulties are under our control, because they are caused by our application's external dependencies.

In my opinion you should have mocks somewhere in your test strategy, but if you do not, let me list five situations that should convince you to consider mocking.

1- Simulation of failure and slowness

Let me start with this one, as it is the most forgotten of the five. Without mocks, how would you see how your application behaves when a dependency fails (by returning a failure, not just by cutting the connection), or when a connection is slow? Well, without mocks we can’t…

Most mock solutions can run as a proxy that sits in the middle of the communication and records the interactions with the application. This is handy when we do not know how to define the expectations from the start, because the recording shows the messages exchanged between the applications. Acting as “the man in the middle”, the proxy can also add latency to requests or return error codes of our choosing.

These abilities open new possibilities in testing: we can now target situations that we could not before.
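As a minimal sketch of what that control looks like, assuming nothing more than the JDK (the built-in com.sun.net.httpserver.HttpServer and java.net.http.HttpClient, Java 11 or later), the stub below pretends to be a dependency and answers with whatever status we choose. The names FailingDependencySketch, fetchPriceOrDefault and the /price path are made up for illustration; a real mock tool gives you the same control with far less code.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Hand-rolled stand-in for a dependency; every name here is hypothetical.
class FailingDependencySketch {

    // Starts a stub on a free local port that answers every request
    // with the given status code and body.
    static HttpServer startStub(int status, String body) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        server.createContext("/", exchange -> {
            byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(status, bytes.length);
            exchange.getResponseBody().write(bytes);
            exchange.close();
        });
        server.start();
        return server;
    }

    // The "application" logic under test: it falls back to a default
    // value when the dependency does not answer with a 200.
    static String fetchPriceOrDefault(String baseUrl) {
        try {
            HttpResponse<String> response = HttpClient.newHttpClient().send(
                    HttpRequest.newBuilder(URI.create(baseUrl + "/price")).build(),
                    HttpResponse.BodyHandlers.ofString());
            return response.statusCode() == 200 ? response.body() : "unavailable";
        } catch (IOException | InterruptedException e) {
            return "unavailable";
        }
    }

    // Runs the application logic against a stub returning the given status.
    static String runAgainstStub(int status, String body) {
        try {
            HttpServer server = startStub(status, body);
            try {
                return fetchPriceOrDefault("http://127.0.0.1:" + server.getAddress().getPort());
            } finally {
                server.stop(0);
            }
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(runAgainstStub(200, "9.99")); // healthy dependency
        System.out.println(runAgainstStub(503, "down")); // failing dependency
    }
}
```

The point of the design is that the failure is produced on demand by the stub, so the fallback path of the application can be checked without breaking a real service.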

2- Performance & virtualization

Regarding performance, mocks are vital if you want to focus on your application/service and not on its dependencies. Without mocks you cannot be sure whether poor performance is due to the application you are validating or to some underlying service. By mocking all the dependencies, you refocus the validation on your application only: in such an environment there are no unplanned delays or unexpected errors, so the conditions are optimal and the focus is on your application.

Some of you may say that we are hiding problems with this approach, but in fact we are focusing on our application and making sure that it behaves as we expect in all the conditions we want to validate. When moving on to integrated tests (we still need those, just fewer of them), you can be confident that your application behaves as expected in those situations.

Mock testing is only as good as the situations you emulate, but that is a limitation of the tester, not of the approach.

The responsibility is high, as all the scenarios we wish to validate must be thought of from the start. This implies defining expectations that emulate each of those situations.

In this section we cannot forget mobile applications: they are usually built on API calls, which makes them a perfect scenario for mocking all of those dependencies and making the validations a lot faster and more stable.

3- Data setup

One of the most challenging aspects of testing is having the right data to simulate the behaviors we want to test. Some companies use Test Data Management (TDM) tools that can fetch data from production (masking what is necessary) or generate synthetic data based on the production database schemas. These solutions are often complicated to get working, and risky because they access production data. In reality, most companies that have grown from a start-up into a big company have some issue with their data: either it does not follow all best practices, or it has special behaviors implemented that are hard to generalize.

Also, applications no longer live off data alone: most (micro)services also depend on messaging systems like Kafka, for example to support geo-distribution, so applications no longer just generate data but react to exchanged messages in order to generate it.

So why rely on TDM tools when we can simulate the applications above the data? In theory we do not need to control the data if we control the answers that applications and services return. By defining expectations we are simulating behaviors, workflows and all the underlying data.
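To illustrate controlling the answers instead of the data, here is a small plain-Java sketch. Everything in it is hypothetical (the BillingClient interface, the canPlaceOrder rule, the customer ids); the only point is that the logic under test never needs a database seeded with overdue invoices, just a table of expected answers.

```java
import java.util.Map;

class CannedAnswersSketch {

    // Hypothetical dependency: in production this would call a billing
    // service that sits in front of a database.
    interface BillingClient {
        int overdueInvoices(String customerId);
    }

    // The logic under test only sees the answers, never the underlying data.
    static boolean canPlaceOrder(BillingClient billing, String customerId) {
        return billing.overdueInvoices(customerId) == 0;
    }

    // A stub whose "data" is just a table of expected answers;
    // unknown customers are treated as having no overdue invoices.
    static BillingClient stubWith(Map<String, Integer> answers) {
        return customerId -> answers.getOrDefault(customerId, 0);
    }

    public static void main(String[] args) {
        BillingClient billing = stubWith(Map.of("good-payer", 0, "late-payer", 3));
        System.out.println(canPlaceOrder(billing, "good-payer"));
        System.out.println(canPlaceOrder(billing, "late-payer"));
    }
}
```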

4- Assisted development

Imagine that your company has multiple (micro)services that interact with one another but, as we all know, whose development may not be aligned. One service can start development before the service it depends on has been deployed, leaving nothing to validate against. Here again mocks are handy, and this is also a suggestion for future use, as you will see below: if every service starts by publishing a mock of itself before the actual development, together with expectation templates that other teams can use to define their own expectations, teams and services become independent without risking misalignment between the services.

5- Independence of external services (such as payment APIs)

You probably have an external dependency somewhere, for example a payment integration or an authentication mechanism. After all, why reinvent the wheel if an external service already returns what you need? That is true, but it introduces an external dependency into your workflows/environments: there is a call in your workflow that you cannot control because it is external to your company.

Requests to an external service can also add latency or hit timeouts that make your tests fail for the wrong reasons (do not get me wrong, these situations are valuable to identify, just not in the context of these validations).

In that case you get uncontrolled instability in your tests, which is not ideal. To solve this you can use mocks that emulate the external behavior, with the certainty that they will not fail or delay the tests.

What to use? How does it work? Time for the Lab

When searching for the right tool, you need to know how you want to use it, because that determines which tool is best for you and your team. There are two main usages you can go for:

- Use it programmatically

- Use it as a standalone

Two of the most used tools are WireMock and MockServer, and both let you adjust their usage to your needs. For the purpose of this blog entry I will use WireMock and demonstrate possible usages of the tool. Before diving into the demonstration, I just want to highlight that this tool can be used as a proxy that records the communications and generates the expectations, or stub mappings, while doing so. This is handy if you do not have a clue about the messages exchanged between the applications: the proxy records all interactions and generates the stub mappings for you, and then it is up to you to customize them to your needs.

The examples I will provide are simple ones that define a stub for an endpoint and call that endpoint directly. In most real cases we are mocking dependencies of the application we are testing, which means that I exercise the application I want to test, and that application is the one that calls the mocked dependency.

With these tools most of the time all that you can do programmatically you can also define in a standalone experience, but let’s start with an example of usage with the programmatic approach.

Programmatically

In this approach I will use Maven and JUnit 5 (Jupiter) to define the tests and do the validations. This way of doing things is closer to the unit test approach, as we define the setup and usage of WireMock in the code of the tests.

The first thing is to create a new instance of WireMock and define some configuration parameters:

    @RegisterExtension
    WireMockExtension wm = WireMockExtension.newInstance()
            .options(wireMockConfig().dynamicPort().dynamicHttpsPort())
            .configureStaticDsl(true)
            .proxyMode(true)
            .build();

We are using @RegisterExtension because it allows multiple WireMock instances and gives full control over the configuration. In summary, we create a new instance on dynamic HTTP and HTTPS ports, and configureStaticDsl(true) points the static DSL (calls such as stubFor) at this instance. Note that the server lifecycle depends on how the field is declared: with a static field the WireMock server starts before the first test method in the test class and stops after the last one has completed, while with an instance field, as here, it is started and stopped around each test method. The last option activates the proxy mode of the server to allow multi-domain mocking.

These are simple configurations but you can combine a series of parameters to achieve a custom configuration that suits your case.

Next we define the stub mappings: all requests to “one.my.domain/things” will return an HTTP 200 with “1” in the body, and a similar stub returns “2” for “two.my.domain/things”.

    @Test
    void configures_jvm_proxy_and_enables_browser_proxying() throws Exception {
        stubFor(get("/things").withHost(equalTo("one.my.domain")).willReturn(ok("1")));
        stubFor(get("/things").withHost(equalTo("two.my.domain")).willReturn(ok("2")));

        assertThat(getContent("http://one.my.domain/things"), is("1"));
        assertThat(getContent("https://two.my.domain/things"), is("2"));
    }

Finally we are asserting that a request to that endpoint will return what we expect, in our case 1 for the first assertion and 2 for the second.

Once you execute this test using:

mvn test -Dtest=ProgrammaticWireMockTest

We get the status back in the output terminal.

    [INFO] Tests run: 1, Failures: 0, Errors: 0, Skipped: 0, Time elapsed: 0.275 s - in com.mock.example.StandAloneWireMockTest
    [INFO]
    [INFO] Results:
    [INFO]
    [INFO] Tests run: 1, Failures: 0, Errors: 0, Skipped: 0
    [INFO]
    [INFO] ------------------------------------------------------------------------
    [INFO] BUILD SUCCESS
    [INFO] ------------------------------------------------------------------------
    [INFO] Total time: 2.293 s
    [INFO] Finished at: 2022-04-19T18:37:01+01:00
    [INFO] ------------------------------------------------------------------------

The mocking possibilities with a tool like this one are almost endless, but I want to share two examples that I see most people forget when they are testing, both about validating faults.

Faults

With WireMock we can simulate a delay in the request and validate that our application still responds after a preset delay. WireMock allows static delays or dynamic ones, please check their documentation to see all the possibilities.

In my case I will define a delay of 2000 milliseconds and check if we still get the response we expected. For that I have put together this method:

    @Test
    void configures_delay() throws Exception {
        stubFor(get("/delayed").withHost(equalTo("my.domain")).willReturn(
                aResponse()
                        .withStatus(200)
                        .withFixedDelay(2000)
                        .withBody("Delayed but good!")));

        assertThat(getContent("http://my.domain/delayed"), is("Delayed but good!"));
    }

That is inserting a fixed delay of 2000 milliseconds and asserting that we still get the answer back.
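A fixed delay becomes more interesting when it is combined with a client timeout, so that the slow path of the application is exercised too. The sketch below is not WireMock: it is a hand-rolled stub on the JDK's built-in HttpServer (Java 11 or later), and the class and method names are hypothetical. It assumes the application would use java.net.http.HttpClient with a per-request timeout; the same idea applies to whatever client your application really uses.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.net.http.HttpTimeoutException;
import java.nio.charset.StandardCharsets;
import java.time.Duration;

class DelayedStubSketch {

    // Calls a stub that waits stubDelayMillis before answering, using a client
    // that gives up after clientTimeoutMillis. Returns the body, or "timeout".
    static String callWithTimeout(long stubDelayMillis, long clientTimeoutMillis) {
        HttpServer server = null;
        try {
            server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
            server.createContext("/delayed", exchange -> {
                try {
                    Thread.sleep(stubDelayMillis); // the simulated slowness
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
                byte[] body = "Delayed but good!".getBytes(StandardCharsets.UTF_8);
                exchange.sendResponseHeaders(200, body.length);
                exchange.getResponseBody().write(body);
                exchange.close();
            });
            server.start();

            HttpRequest request = HttpRequest.newBuilder(
                            URI.create("http://127.0.0.1:" + server.getAddress().getPort() + "/delayed"))
                    .timeout(Duration.ofMillis(clientTimeoutMillis))
                    .build();
            return HttpClient.newHttpClient()
                    .send(request, HttpResponse.BodyHandlers.ofString())
                    .body();
        } catch (HttpTimeoutException e) {
            return "timeout"; // the client gave up before the stub answered
        } catch (IOException | InterruptedException e) {
            throw new IllegalStateException(e);
        } finally {
            if (server != null) {
                server.stop(0);
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(callWithTimeout(50, 2000)); // delay shorter than the timeout
        System.out.println(callWithTimeout(500, 100)); // delay longer than the timeout
    }
}
```

With both cases covered, a test can check that the application still gets its answer after a tolerable delay and that it takes the timeout path when the dependency is too slow.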

The second example validates how my application behaves if an error is returned from the call to a dependency. This time I have defined that a call to the endpoint “/fault” gets back a fault with an empty response.

    @Test
    void configures_fault() {
        stubFor(get("/fault").withHost(equalTo("my.domain"))
                .willReturn(aResponse().withFault(Fault.EMPTY_RESPONSE)));

        // assertThrows (JUnit 5) fails the test if the call does not throw.
        Exception e = assertThrows(Exception.class,
                () -> getContent("http://my.domain/fault"));
        assertThat(e.getMessage(), is("The target server failed to respond"));
    }

In this case the validation is a little trickier, as the call throws an exception that we must catch, and then we validate that it is the exception we are expecting.

The last example I want to leave here is the possibility of defining scenarios. This is handy when we want different answers to sequential requests in order to simulate a behavior, for example inserting a product into a bag and then validating that the bag contains it. Without scenarios, the mock server always gives the same answer to identical requests.

States

To solve this, WireMock lets us define scenarios, which are essentially state machines. The starting state is always “Scenario.STARTED”, and we define the next state with the method “willSetStateTo()”.

Let’s look at an example.

    @Test
    public void toDoListScenario() throws Exception {
        // Initial state: the bag only contains the Watch.
        stubFor(get("/bag/add").withHost(equalTo("my.domain")).inScenario("Add to bag")
                .whenScenarioStateIs(STARTED)
                .willReturn(aResponse()
                        .withBody("<items>" +
                                " <item>Watch</item>" +
                                "</items>")));

        // Adding a Shirt moves the scenario to the "Shirt added" state.
        stubFor(post("/bag/add").withHost(equalTo("my.domain")).inScenario("Add to bag")
                .whenScenarioStateIs(STARTED)
                .withRequestBody(containing("Shirt"))
                .willReturn(aResponse().withStatus(201))
                .willSetStateTo("Shirt added"));

        // In the "Shirt added" state the bag contains both items.
        stubFor(get("/bag/add").withHost(equalTo("my.domain")).inScenario("Add to bag")
                .whenScenarioStateIs("Shirt added")
                .willReturn(aResponse()
                        .withBody("<items>" +
                                " <item>Watch</item>" +
                                " <item>Shirt</item>" +
                                "</items>")));

        // Exceptions are no longer swallowed: any failed call fails the test.
        String result = getContent("http://my.domain/bag/add");
        assertThat(result, containsString("Watch"));
        assertThat(result, not(containsString("Shirt")));

        // postContent is a helper that is not shown here; it is assumed to
        // return the HTTP status code of the response.
        int status = postContent("http://my.domain/bag/add", "Shirt");
        assertThat(status, is(201));

        result = getContent("http://my.domain/bag/add");
        assertThat(result, containsString("Watch"));
        assertThat(result, containsString("Shirt"));
    }

Here the first GET request returns a list with one item in it, a Watch; the next request is a POST that inserts another item, a Shirt, into the list; and finally a second GET request validates that the returned list has both items.

This is achieved with WireMock using states; the initial state of the application is defined by:

    stubFor(get("/bag/add").withHost(equalTo("my.domain")).inScenario("Add to bag")
            .whenScenarioStateIs(STARTED)
            .willReturn(aResponse()
                    .withBody("<items>" +
                            " <item>Watch</item>" +
                            "</items>")));

We actually have two stubs defined for the STARTED state. This is only possible because they match two different types of request, one GET and one POST, which lets WireMock know which one to use in each situation.

Once WireMock has answered the POST request it moves to the next state, which in our case is defined by “.willSetStateTo("Shirt added")”, so any subsequent request is matched against the state “Shirt added”.

That state is defined in our code here:

    stubFor(get("/bag/add").withHost(equalTo("my.domain")).inScenario("Add to bag")
            .whenScenarioStateIs("Shirt added")
            .willReturn(aResponse()
                    .withBody("<items>" +
                            " <item>Watch</item>" +
                            " <item>Shirt</item>" +
                            "</items>")));

It defines that the GET request will now be answered with the list of both items.
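If the state machine idea still feels abstract, the plain-Java sketch below reproduces it without any library. It is only an illustration of the mechanism, not how WireMock is implemented: the ScenarioSketch class, its methods and the simplified request strings are all made up for this example.

```java
import java.util.HashMap;
import java.util.Map;

// A toy scenario: stubs are only eligible in one state, and serving a stub
// may move the scenario to another state.
class ScenarioSketch {
    static final String STARTED = "Started";

    // One stub: the canned response and the state to move to (null = stay).
    static final class Stub {
        final String response;
        final String nextState;

        Stub(String response, String nextState) {
            this.response = response;
            this.nextState = nextState;
        }
    }

    private final Map<String, Stub> stubs = new HashMap<>();
    private String state = STARTED;

    // Registers a stub for a request that only applies in the given state.
    void stub(String whenState, String request, String response, String nextState) {
        stubs.put(whenState + "|" + request, new Stub(response, nextState));
    }

    // Serves the stub registered for the current state, then applies its transition.
    String handle(String request) {
        Stub stub = stubs.get(state + "|" + request);
        if (stub == null) {
            return "404";
        }
        if (stub.nextState != null) {
            state = stub.nextState;
        }
        return stub.response;
    }

    // The bag example from above, with simplified requests and responses.
    static ScenarioSketch bagScenario() {
        ScenarioSketch scenario = new ScenarioSketch();
        scenario.stub(STARTED, "GET /bag", "Watch", null);
        scenario.stub(STARTED, "POST /bag Shirt", "201", "Shirt added");
        scenario.stub("Shirt added", "GET /bag", "Watch,Shirt", null);
        return scenario;
    }

    public static void main(String[] args) {
        ScenarioSketch scenario = bagScenario();
        System.out.println(scenario.handle("GET /bag"));
        System.out.println(scenario.handle("POST /bag Shirt"));
        System.out.println(scenario.handle("GET /bag"));
    }
}
```

The same GET request gets two different answers purely because the POST in between changed the state, which is exactly what the scenario stubs above rely on.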

Standalone

The other option provided by this tool is to run it standalone. This can be powerful if you do not want to specify the logic of the requests in the code and would rather focus your automation code on testing only. It is also risky if you use a single server for multiple tests, because the tests may consume each other's stubs and generate errors.

The other challenge is that you need to upload the stub mappings before the test execution, in each environment where you need them. In my experience this can be done in the deployment logic of a mock environment, where we upload the stub mappings, start the WireMock server and point the endpoint of the application to the WireMock server.

In order to start the WireMock server you need to download the jar file and execute the following command:

java -jar wiremock-jre8-standalone-2.32.0.jar

There are a lot of other configuration options but this will start up a mock server and serve the mappings present in the “mappings” directory.

So I started by defining two stub mappings in two separate files. The first one returns a response holding a “2” when we perform a request to “/two/things”:

    {
      "request": {
        "method": "GET",
        "url": "/two/things"
      },
      "response": {
        "status": 200,
        "body": "2"
      }
    }

And a second one that will return the response holding a “1” when we perform a request to “/one/things”:

    {
      "request": {
        "method": "GET",
        "url": "/one/things"
      },
      "response": {
        "status": 200,
        "body": "1"
      }
    }
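Mapping files are not the only way to load stubs: a running WireMock server also accepts new stub mappings through its admin API, with a POST to /__admin/mappings. The sketch below only builds that request with the JDK's java.net.http API (Java 11 or later); the class and method names are hypothetical, and the base URL assumes the default standalone port 8080 used in this post.

```java
import java.net.URI;
import java.net.http.HttpRequest;

class MappingUploadSketch {

    // Builds the request that registers one stub mapping on a running server.
    static HttpRequest uploadRequest(String wireMockBaseUrl, String mappingJson) {
        return HttpRequest.newBuilder(URI.create(wireMockBaseUrl + "/__admin/mappings"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(mappingJson))
                .build();
    }

    public static void main(String[] args) {
        String mapping = "{\"request\":{\"method\":\"GET\",\"url\":\"/one/things\"},"
                + "\"response\":{\"status\":200,\"body\":\"1\"}}";
        HttpRequest request = uploadRequest("http://localhost:8080", mapping);

        // Sending needs a running WireMock server, so it is left commented out:
        // java.net.http.HttpClient.newHttpClient()
        //         .send(request, java.net.http.HttpResponse.BodyHandlers.ofString());
        System.out.println(request.method() + " " + request.uri());
    }
}
```

A step like this can live in the deployment logic of the mock environment, right after the server is started.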

Now I just have to define a test that exercises those endpoints and validates that I get the expected results. Notice that the testing code no longer has any setup or logic for the WireMock server.

    @Test
    void configures_jvm_proxy_and_enables_browser_proxying() throws Exception {
        assertThat(getContent("http://localhost:8080/one/things"), is("1"));
        assertThat(getContent("http://localhost:8080/two/things"), is("2"));
    }

    private String getContent(String url) throws Exception {
        try (CloseableHttpResponse response = client.execute(new HttpGet(url))) {
            return EntityUtils.toString(response.getEntity());
        }
    }

Once the code is done we can execute it by running the following command:

mvn test -Dtest=StandAloneWireMockTest

This will execute the tests and generate an output to the terminal showing the overall result of the test.

Future

Take mocking to the next level, like the API-first approach that we hear about in the API world. You must make sure that your application or service works as expected on its own, even before thinking about system tests where you will run e2e tests. Tests in which you mock all other services allow you to validate all of your use cases.

Once a service has been conceived, the first action could be to create a mock instance of it and make it available to the other services that want to integrate with it, so that they can start working. This mock allows expectations to be defined, so that you can validate the interactions even before the service is fully developed. It will also force teams to think about how their services will interact with others and to commit to that beforehand.

This means that for each version of the service we will have a mocked version of it, plus expectation templates available to everyone. In time this brings two issues that the teams must tackle: maintenance and availability.

Going down this road, you must define a process in which each team produces these mocked services, properly tagged with versions and with templates that other teams can use to produce expectations. Make sure these mocks are available in a central place where all teams can retrieve and use them.

Conclusion

Mocking is definitely something to be considered not only for testing but also as a development strategy to reduce dependencies between teams.

Sure, there is a risk of deploying something that is aligned with an old version of the mocked service, but this is not a problem of the mock itself, rather of how you are using this strategy in your company. You must ask yourself why it happened and how you can prevent it in the future. There are strategies that can be applied to prevent this, but again it is not something a single team can implement: to truly take advantage of mocking, all teams must align on the same strategy and usage.

The other risk that most people talk about is that by mocking we may miss some integration validations, because it is impossible to think of every scenario to mock; as with testing in general, it is impossible to cover everything. Bear with me: if all teams make sure their application behaves normally by mocking dependencies and validating all the scenarios they value, then when we integrate with one another and an issue arises, either it is not an issue in our application or, if it is, we can add that scenario to the mocks. Either way, it allows us to focus when searching for the reason for a failure.

Mock it is then, give it a shot!

See you in my next article ;)